As the days go by, there are more benchmarks than ever. It’s hard to keep track of every HellaSwag or DS-1000 that comes out. And what are they even for? Lots of cool-looking names slapped on top of a benchmark to make it look cooler… Not really.

Crazy names aside, these benchmarks serve a very practical and careful purpose. Each one runs a model through a fixed set of tasks and measures how well it performs against a reference standard, which is often how well a typical person does on the same tasks.

This article explains what these benchmarks are, which type of model each one is used to test, and when.

General Intelligence: Can It Really Think?

These benchmarks test how well AI models mimic the thinking ability of humans.

1. MMLU – Massive Multitask Language Understanding

MMLU is a basic “test of general intelligence” for language models. It contains thousands of multiple-choice questions from 57 subjects, with four options per question, covering fields such as medicine, law, mathematics and computer science.
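MMLU is scored as plain accuracy over the multiple-choice answers. The sketch below shows that scoring under the assumption that the model's reply has already been reduced to a letter; the `MCQuestion` type and the prompt layout are illustrative assumptions, not the official evaluation harness.

```python
from dataclasses import dataclass

LETTERS = "ABCD"  # MMLU questions have exactly four options


@dataclass
class MCQuestion:
    """One multiple-choice item (hypothetical structure for illustration)."""
    question: str
    choices: list  # four answer strings, in order A-D
    answer: int    # index (0-3) of the correct choice


def format_prompt(item: MCQuestion) -> str:
    """Render a question with lettered options, ending with an 'Answer:' cue."""
    lines = [item.question]
    for letter, choice in zip(LETTERS, item.choices):
        lines.append(f"{letter}. {choice}")
    lines.append("Answer:")
    return "\n".join(lines)


def accuracy(items, predictions) -> float:
    """Fraction of items where the predicted letter matches the gold letter."""
    correct = 0
    for item, pred in zip(items, predictions):
        # Compare only the first character, so "B" and "B. 4" both count as B
        if pred.strip().upper()[:1] == LETTERS[item.answer]:
            correct += 1
    return correct / len(items)


if __name__ == "__main__":
    q = MCQuestion("What is 2 + 2?", ["3", "4", "5", "6"], answer=1)
    print(format_prompt(q))
    print(accuracy([q, q], ["B", "A"]))  # one right, one wrong
```

Reducing every reply to a single letter keeps grading objective, which is a big part of why MMLU scores are easy to compare across models.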

It’s not perfect, but it’s universal. If a model release skips MMLU, people immediately ask why. That alone shows how important it is.

Used in: General-purpose language models (GPT, Claude, Gemini, Llama, Mistral)

Paper: https://arxiv.org/abs/2009.03300

2. HLE – Humanity’s Last Exam

HLE exists to answer a simple question: Can models handle expert-level reasoning without relying on memorization?

The benchmark brings together extremely difficult questions across mathematics, the natural sciences and the humanities. These questions are intentionally filtered to avoid web-searchable facts and common training leaks.

Its question format may resemble MMLU’s, but unlike MMLU, HLE is designed to push LLMs to their limits, and frontier models score far lower on it than on MMLU.

As frontier models began to saturate older benchmarks, HLE quickly became the new yardstick for pushing the limits!

Used in: Frontier reasoning models and research LLMs (GPT-4, Claude Opus 4.5, Gemini Ultra)

Paper: https://arxiv.org/abs/2501.14249

Mathematical Reasoning: Can It Think Procedurally?

Reasoning, using memory and what has been learned to draw inferences, is what makes humans special. These benchmarks measure how successfully LLMs can reason.

3. GSM8K – Grade School Math 8K (about 8,500 problems)

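GSM8K tests whether a model can reason step by step through grade-school word problems instead of just pattern-matching an answer. Each problem takes between 2 and 8 steps to solve, and scoring usually checks only the final numeric answer, so a model that cannot carry its chain of thought through to the end gets the problem wrong.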

It’s simple! But extremely effective and hard to fake. That’s why it shows up in almost every assessment focused on reasoning.
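GSM8K reference solutions end with a line such as `#### 72`, and a common grading approach compares that number with the final number in the model's response; the intermediate reasoning text is not graded. A minimal sketch of that check, assuming the "last number in the output is the answer" heuristic, which many harnesses use but which is not an official specification:

```python
import re
from typing import Optional

# Matches integers and decimals, allowing thousands separators such as 1,200
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def reference_answer(solution: str) -> str:
    """GSM8K reference solutions end with a line like '#### 72'."""
    return solution.split("####")[-1].strip().replace(",", "")


def predicted_answer(model_output: str) -> Optional[str]:
    """Heuristic: treat the last number in the model's response as its answer."""
    matches = NUMBER.findall(model_output)
    if not matches:
        return None
    return matches[-1].replace(",", "")


def gsm8k_correct(model_output: str, solution: str) -> bool:
    pred = predicted_answer(model_output)
    if pred is None:
        return False
    # Compare numerically so that "72" and "72.0" count as the same answer
    return float(pred) == float(reference_answer(solution))
```

Grading only the final number is what makes the benchmark cheap to run at scale; the step-by-step reasoning matters because without it the final number is rarely right.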

Used in: Reasoning-focused language models and chain-of-thought models (GPT-5, PaLM, LLaMA)

Paper: https://arxiv.org/abs/2110.14168

4. MATH – A mathematics dataset for advanced problem solving

This benchmark raises the ceiling. The problems come from competition-style mathematics and require abstraction, symbolic manipulation, and long chains of reasoning.

The difficulty of these problems is exactly what makes MATH useful: models that perform well on GSM8K but collapse on MATH are exposed immediately.
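MATH reference solutions put the final answer inside `\boxed{...}`, so graders typically extract the last boxed expression from the model's output and compare it with the reference. A minimal sketch of that extraction, honoring nested braces; real harnesses normalize answers far more aggressively (for example treating `\dfrac` and `\frac` as equal), and the whitespace-only `normalize` here is a deliberate simplification.

```python
from typing import Optional

BOXED = "\\boxed{"


def last_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...}, honoring nested braces."""
    start = text.rfind(BOXED)
    if start == -1:
        return None
    begin = start + len(BOXED)
    depth = 1
    for i in range(begin, len(text)):
        if text[i] == "{":
            depth += 1
        elif text[i] == "}":
            depth -= 1
            if depth == 0:
                return text[begin:i]
    return None  # unbalanced braces: treat as no answer


def normalize(answer: str) -> str:
    """Very light normalization: drop whitespace only."""
    return "".join(answer.split())


def math_correct(model_output: str, reference_solution: str) -> bool:
    pred = last_boxed(model_output)
    ref = last_boxed(reference_solution)
    return pred is not None and ref is not None and normalize(pred) == normalize(ref)
```

A brace counter is needed because answers such as `\frac{1}{2}` contain their own braces, so a simple regex up to the first `}` would cut them short.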

Used in: Advanced reasoning and mathematics LLMs (Minerva, GPT-4, DeepSeek-Math)

Paper: https://arxiv.org/abs/2103.03874

Software Engineering: Can It Replace Human Coders?

I’m kidding. These benchmarks test how well LLMs produce working, error-free code.

5. HumanEval – Human Evaluation Benchmark for code generation

HumanEval is the most cited coding benchmark out there. It consists of 164 hand-written programming problems and rates models on how well they write Python functions that pass hidden unit tests. No subjective evaluation: either the code works or it doesn’t.

If you see a coding score on a model card, it’s almost always one of these.
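HumanEval results are usually reported as pass@k: generate n ≥ k samples per problem, count the c samples that pass the unit tests, and estimate the probability that at least one of k samples would pass. The Codex paper linked below introduced the unbiased estimator sketched here; the sketch assumes the pass/fail counts already exist, since actually executing generated code safely requires a sandbox.

```python
from math import comb
from statistics import mean


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed all unit tests, k: budget.
    Computes 1 - C(n - c, k) / C(n, k), the chance that at least one of
    k samples drawn without replacement from the n is correct.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return mean(pass_at_k(n, c, k) for n, c in results)
```

Sampling more than k completions and using this estimator gives a lower-variance number than literally drawing k samples once.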

Used in: Code generation models (OpenAI Codex, CodeLLaMA, DeepSeek-Coder)

Paper: https://arxiv.org/abs/2107.03374

6. SWE-Bench – Software Engineering Benchmark

SWE-Bench tests real-world engineering, not toy problems.

Models get real GitHub issues and have to generate patches that fix them in real repositories. This benchmark is important because it reflects how people really want to use the coding models.
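SWE-Bench's harness applies the model's patch to the repository in an isolated environment and runs its tests. An issue counts as resolved only if the tests that reproduce the bug (listed as `FAIL_TO_PASS`) now pass and the previously passing tests (`PASS_TO_PASS`) still pass. The sketch below covers only that final bookkeeping step; `test_results` is a hypothetical mapping from test name to pass/fail that a real harness would produce.

```python
def is_resolved(test_results: dict, fail_to_pass: list, pass_to_pass: list) -> bool:
    """Resolved only if the bug-reproducing tests now pass and no previously
    passing test broke. Missing results (e.g. patch failed to apply) count as failures."""
    required = list(fail_to_pass) + list(pass_to_pass)
    return all(test_results.get(name, False) for name in required)


def resolve_rate(instances) -> float:
    """instances: list of (test_results, fail_to_pass, pass_to_pass) tuples."""
    if not instances:
        return 0.0
    return sum(is_resolved(*inst) for inst in instances) / len(instances)
```

Requiring the old tests to keep passing is what stops a model from "fixing" an issue by breaking something else.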

Used in: Software engineering models and agentic coding systems (Devin, SWE-Agent, AutoGPT)

Paper: https://arxiv.org/abs/2310.06770

Conversational Ability: Can It Behave Like a Human?

These benchmarks test whether models can handle multi-turn conversations and how well they perform compared with a human.

7. MT-Bench – Multi-Turn Benchmark

MT-Bench evaluates how the models behave during several conversational turns. It tests coherence, retention of instructions, consistency of reasoning and eloquence.

The score is generated using LLM-as-a-judge, with a strong model such as GPT-4 grading each answer. That makes MT-Bench scalable enough to have become the default chat benchmark.
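In MT-Bench's single-answer grading, the judge model rates each response on a 1 to 10 scale and writes the score in a bracketed format such as `[[8]]`, which makes it easy to parse. A minimal sketch of the prompt-building, parsing, and averaging steps; the template is a simplified stand-in for the official judge prompt, and the call to an actual judge model is deliberately left out.

```python
import re
from statistics import mean
from typing import Optional

# Simplified stand-in for a judge prompt (not the official MT-Bench wording)
JUDGE_TEMPLATE = (
    "Please act as an impartial judge and rate the assistant's answer "
    "on a scale of 1 to 10. End with your score in the format [[rating]].\n\n"
    "[Question]\n{question}\n\n[Answer]\n{answer}"
)

RATING = re.compile(r"\[\[(\d+(?:\.\d+)?)\]\]")


def build_judge_prompt(question: str, answer: str) -> str:
    return JUDGE_TEMPLATE.format(question=question, answer=answer)


def parse_rating(judge_output: str) -> Optional[float]:
    """Extract the last [[n]] score; return None if missing or out of range."""
    matches = RATING.findall(judge_output)
    if not matches:
        return None
    score = float(matches[-1])
    return score if 1 <= score <= 10 else None


def average_score(judge_outputs) -> Optional[float]:
    """Average all parseable ratings, skipping malformed judge replies."""
    scores = [s for s in map(parse_rating, judge_outputs) if s is not None]
    return mean(scores) if scores else None
```

A rigid output format for the judge is the design choice that lets thousands of judgments be scored without a human in the loop.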

Used in: Chat-oriented conversation models (ChatGPT, Claude, Gemini)

Paper: https://arxiv.org/abs/2306.05685

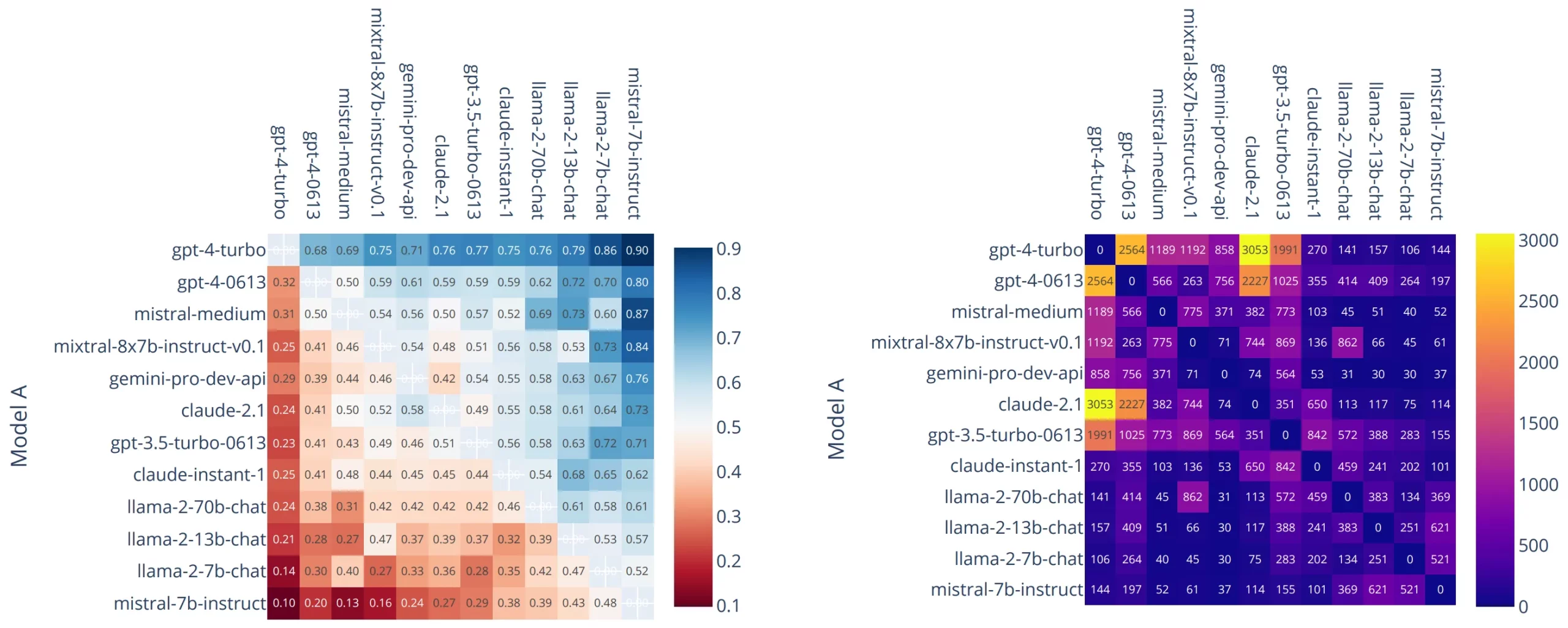

8. Chatbot Arena – benchmark of human preferences

Chatbot Arena bypasses metrics and lets people decide.

Models are compared in anonymous battles and users vote on which answer they prefer. Ratings are maintained using an Elo score.

Despite the noise, the leaderboard carries serious weight because it reflects real user preferences at scale.
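The classic Elo update behind such ratings is short: each vote nudges the winner up and the loser down in proportion to how surprising the result was. A minimal sketch of online Elo, assuming a starting rating of 1000 and K = 32 (both illustrative). Note that LMSYS later moved from online Elo to fitting a Bradley-Terry model over all votes, which yields Elo-scale ratings that do not depend on the order of the battles.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Expected score for A against B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32.0):
    """score_a is 1 if A won, 0 if A lost, 0.5 for a tie."""
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta


def run_battles(battles, initial: float = 1000.0, k: float = 32.0) -> dict:
    """battles: iterable of (model_a, model_b, score_a) in the order they happened."""
    ratings = {}
    for a, b, score_a in battles:
        r_a = ratings.get(a, initial)
        r_b = ratings.get(b, initial)
        ratings[a], ratings[b] = elo_update(r_a, r_b, score_a, k)
    return ratings
```

Usage: `run_battles([("model-x", "model-y", 1.0)])` moves two fresh models from 1000 to 1016 and 984.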

Used in: All major chat models for evaluating human preferences (ChatGPT, Claude, Gemini, Grok)

Paper: https://arxiv.org/abs/2403.04132

Information Retrieval: Can It Look Things Up?

Or more specifically: can it find the right information when it matters?

9. BEIR – Benchmarking Information Retrieval

BEIR is a standard benchmark for evaluating search and embedding models.

It aggregates multiple datasets across domains such as question answering, fact-checking, and scientific research, making it the default reference for the retrieval step of RAG pipelines.
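BEIR reports nDCG@10 as its main metric: it rewards placing relevant documents near the top of the ranking and normalizes by the best possible ranking, so scores fall between 0 and 1. A minimal sketch using plain dicts for the relevance labels (`qrels`); the dict layout and function names are illustrative assumptions, not BEIR's own API.

```python
import math


def dcg(gains) -> float:
    """Discounted cumulative gain: position i (0-based) is discounted by log2(i + 2)."""
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains))


def ndcg_at_k(ranked_ids, qrels: dict, k: int = 10) -> float:
    """ranked_ids: retriever output, best first. qrels: doc_id -> relevance grade."""
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_ids[:k]]
    ideal = sorted(qrels.values(), reverse=True)[:k]
    best = dcg(ideal)
    return dcg(gains) / best if best > 0 else 0.0


def mean_ndcg(runs: dict, all_qrels: dict, k: int = 10) -> float:
    """Average nDCG@k over queries; runs and all_qrels are keyed by query id."""
    scores = [ndcg_at_k(runs.get(qid, []), qrels, k) for qid, qrels in all_qrels.items()]
    return sum(scores) / len(scores)
```

Because of the logarithmic discount, a relevant document at rank 2 still earns about 63% of the credit it would get at rank 1, while one past rank 10 earns nothing.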

Used in: Search and embedding models (OpenAI text-embedding-3, BERT, E5, GTE)

Paper: https://arxiv.org/abs/2104.08663

10. Needle in a Haystack – long-context recall test

This test checks whether long-context models actually use their full context window.

A small but critical fact (the needle) is buried deep within a long document (the haystack), and the model must retrieve it correctly. As context windows grew, it became a standard sanity check.
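The test is easy to reproduce: take filler text, insert a known fact at a chosen depth, ask the model about it, and repeat across depths and context lengths. A minimal sketch of the construction and grading; it counts sentences rather than tokens (the reference repo measures context length in tokens) and grades with a plain substring match, both simplifications, and the function names are illustrative.

```python
def insert_needle(haystack_sentences, needle: str, depth: float) -> str:
    """Place the needle at a relative depth (0.0 = start, 1.0 = end), between sentences."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    pos = round(depth * len(haystack_sentences))
    sentences = list(haystack_sentences[:pos]) + [needle] + list(haystack_sentences[pos:])
    return " ".join(sentences)


def build_prompt(context: str, question: str) -> str:
    return f"{context}\n\nQuestion: {question}\nAnswer:"


def recalled(model_answer: str, expected: str) -> bool:
    """Crude grading: did the expected fact appear in the answer?"""
    return expected.lower() in model_answer.lower()


def depth_sweep(haystack_sentences, needle: str, depths) -> dict:
    """Build one context per depth; a full run would also vary total length."""
    return {d: insert_needle(haystack_sentences, needle, d) for d in depths}
```

Plotting `recalled` over a grid of depths and lengths gives the familiar heatmap that shows where in the context a model starts to lose track.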

Used in: Long context language models (Claude 3, GPT-4.1, Gemini 2.5)

Reference repo: https://github.com/gkamradt/LLMTest_NeedleInAHaystack

Improved benchmarks

These are just the most popular benchmarks used to evaluate LLMs. Many more exist, and some of these have already been superseded by improved variants such as MMLU-Pro and GSM16K. But now that you have a good understanding of what these benchmarks represent, the improved versions should be easy to follow.

Use the information above as a reference for the most commonly used LLM benchmarks.

Frequently Asked Questions

What do LLM benchmarks measure?

Answer: They measure how well models perform on tasks such as reasoning, coding, and retrieval compared to humans.

What is MMLU?

Answer: It is a general intelligence test for language models, with questions across subjects such as mathematics, law, medicine and history.

What does SWE-Bench test?

Answer: It tests whether models can fix real GitHub issues by generating correct code patches.
